As with any technical or business IT capability, one prerequisite for adoption is understanding the WHAT and the WHY; a clear definition of these aspects at the beginning of the Machine Learning journey is critical to charting the course.

Wednesday, November 30, 2016

Monday, November 28, 2016

Who knows why ontologies are so hidden?

“Ontologies are structural frameworks for organizing information and are used as knowledge representation. Ontology management supports and expands data modeling methodologies to exploit the business value locked up in information silos. Information leaders must embrace this emerging trend.”

Guido De Simoni - Gartner (23/02/2015)

In short, ontologies are becoming increasingly crucial in several domains, especially those under growing investigation:

- Knowledge Management, where ontologies allow a clear, deep and shared understanding of any knowledge domain ...

- Semantic Web, where ontologies can give meaning to published data ...

- Big Data, where ontologies allow semantic integration across several data sources ...

- Software Engineering, where ontologies can play the role of a formal business specification ...

- Artificial Intelligence, where ontologies can represent required knowledge for reasoning ...

- Robotics, where ontologies can help robots to be aware of their environment ...

The role of ontologies in Big Data initiatives

Why Ontologies

In short, people interpret, machines don’t. As such, an effort must be undertaken in order to support adequate usage of digital resources. Ontologies are useful when meanings need to be formally defined.

Ambiguity for computers

The problem is that a word such as “rice” or “cook” has no meaning, or semantic content, to the computer.

https://www.linkedin.com/pulse/big-data-initiatives-ontologies-role-pete-ianace?articleId=8647865779102847774#comments-8647865779102847774&trk=sushi_topic_posts_guest
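As a minimal sketch of what "formally defining meaning" can look like, the Python snippet below stores a few subject-predicate-object triples and follows `is_a` links to infer what a term is. The vocabulary (`rice`, `cook`, `Grain`, `Food`, `Action`) and the helpers `ancestors` and `can_apply` are hypothetical, chosen only to illustrate the "rice"/"cook" example; a real ontology would normally be written in a standard such as RDF or OWL rather than as a Python set.

```python
# Hypothetical mini-ontology: (subject, predicate, object) triples.
TRIPLES = {
    ("rice", "is_a", "Grain"),
    ("Grain", "is_a", "Food"),
    ("cook", "is_a", "Action"),
    ("cook", "applies_to", "Food"),
}


def ancestors(term, triples=TRIPLES):
    """Return every class reachable from `term` by following is_a links."""
    found = set()
    frontier = [term]
    while frontier:
        current = frontier.pop()
        for subject, predicate, obj in triples:
            if subject == current and predicate == "is_a" and obj not in found:
                found.add(obj)
                frontier.append(obj)
    return found


def can_apply(action, thing, triples=TRIPLES):
    """True if `action` applies to `thing` or to one of its ancestor classes."""
    targets = {obj for s, p, obj in triples if s == action and p == "applies_to"}
    return bool(targets & (ancestors(thing, triples) | {thing}))


if __name__ == "__main__":
    print(sorted(ancestors("rice")))   # classes that "rice" belongs to
    print(can_apply("cook", "rice"))   # can the action "cook" be applied to "rice"?
```

With these definitions in place, the program no longer treats "rice" and "cook" as opaque strings: it can derive that rice is a food and that cooking applies to it, which is the kind of interpretation people do implicitly.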

Tuesday, November 8, 2016

Top Algorithms and Methods Used by Data Scientists

The following table shows usage of different algorithm types (Supervised, Unsupervised, Meta, and Other) by employment type.

Table 1: Algorithm usage by Employment Type

| Employment Type | % Voters | Avg Num Algorithms Used | % Used Supervised | % Used Unsupervised | % Used Meta | % Used Other Methods |
|---|---|---|---|---|---|---|
| Industry | 59% | 8.4 | 94% | 81% | 55% | 83% |
| Government/Non-profit | 4.1% | 9.5 | 91% | 89% | 49% | 89% |
| Student | 16% | 8.1 | 94% | 76% | 47% | 77% |
| Academia | 12% | 7.2 | 95% | 81% | 44% | 77% |
| All | | 8.3 | 94% | 82% | 48% | 81% |

Table 2: Top 10 Algorithms + Deep Learning usage by Employment Type

| Algorithm | Industry | Government/Non-profit | Academia | Student | All |
|---|---|---|---|---|---|
| Regression | 71% | 63% | 51% | 64% | 67% |
| Clustering | 58% | 63% | 51% | 58% | 57% |
| Decision | 59% | 63% | 38% | 57% | 55% |
| Visualization | 55% | 71% | 28% | 47% | 49% |
| K-NN | 46% | 54% | 48% | 47% | 46% |
| PCA | 43% | 57% | 48% | 40% | 43% |
| Statistics | 47% | 49% | 37% | 36% | 43% |
| Random Forests | 40% | 40% | 29% | 36% | 38% |
| Time series | 42% | 54% | 26% | 24% | 37% |
| Text Mining | 36% | 40% | 33% | 38% | 36% |
| Deep Learning | 18% | 9% | 24% | 19% | 19% |

Monday, November 7, 2016

Machine Learning: A Complete and Detailed Overview

The 10 Algorithms Machine Learning Engineers Need to Know

http://www.kdnuggets.com/2016/08/10-algorithms-machine-learning-engineers.html

|

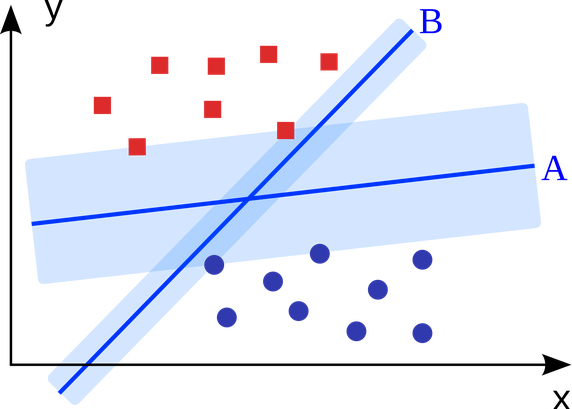

| Support Vector Machines |

|

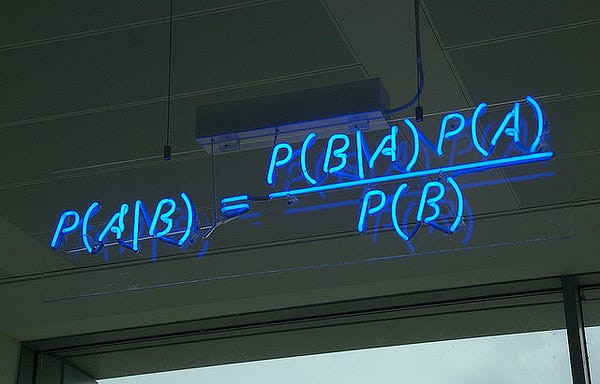

| Naïve Bayes Classification |

|

| Decision Trees |

|

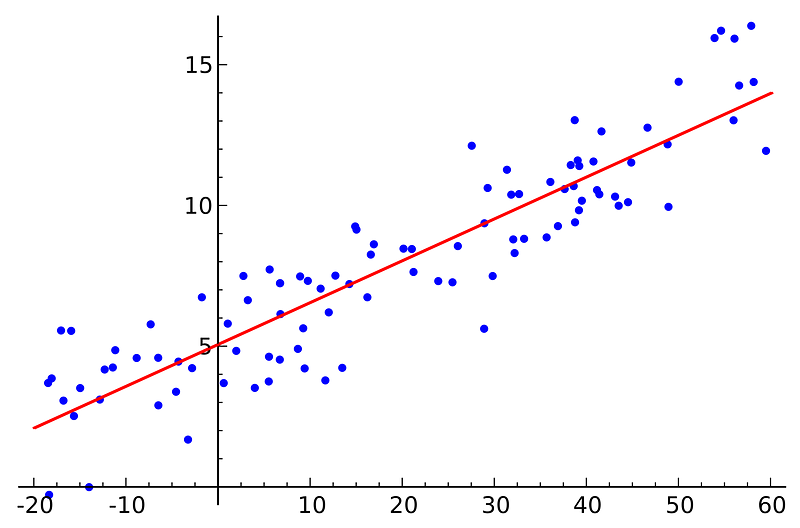

| Ordinary Least Squares Regression |

|

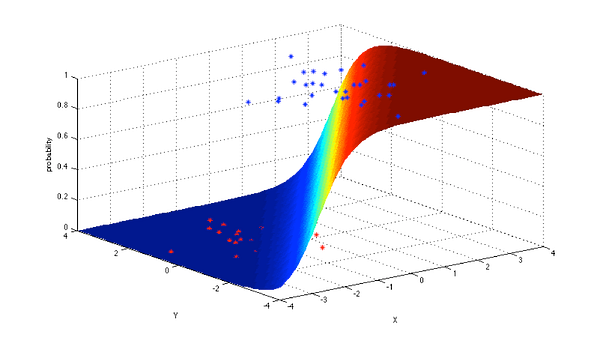

| Logistic Regression |

|

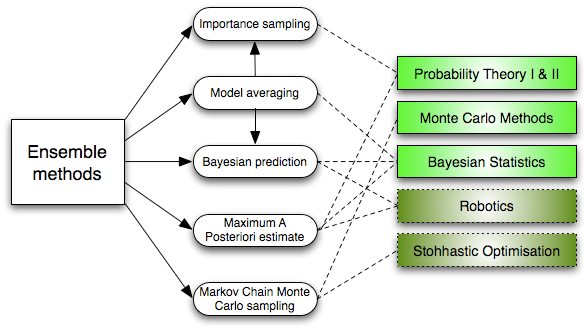

| Ensemble Methods |

|

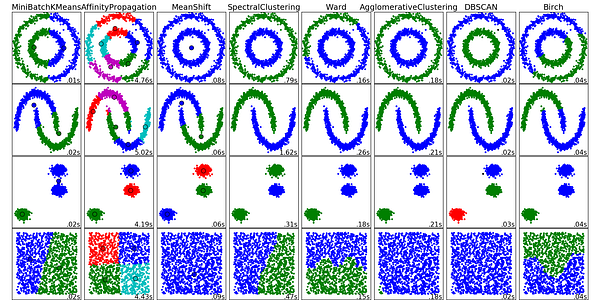

| Clustering Algorithms |

|

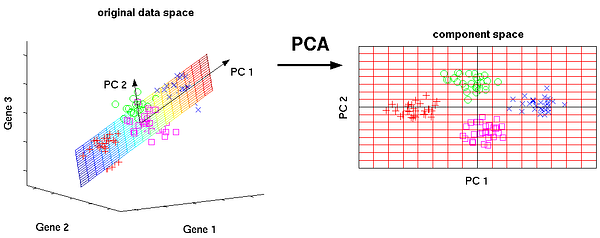

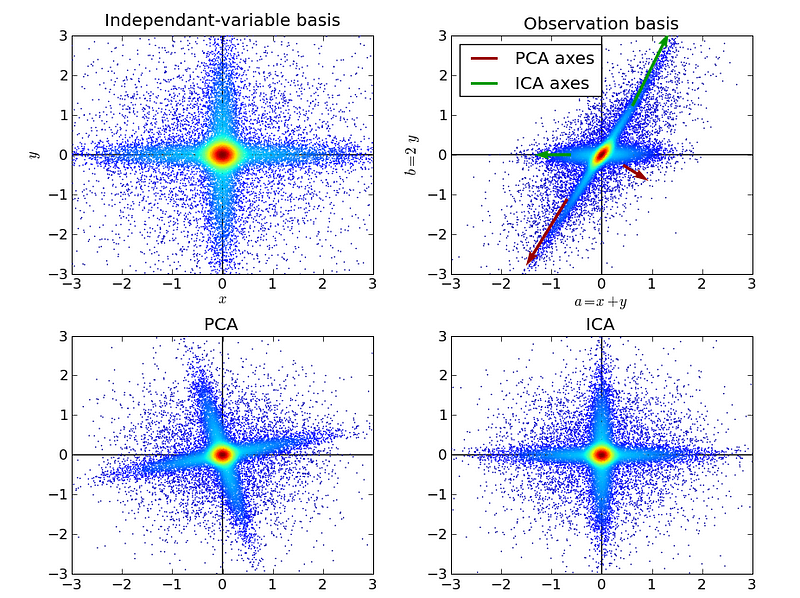

| Principal Component Analysis |

|

| Independent Component Analysis |

|

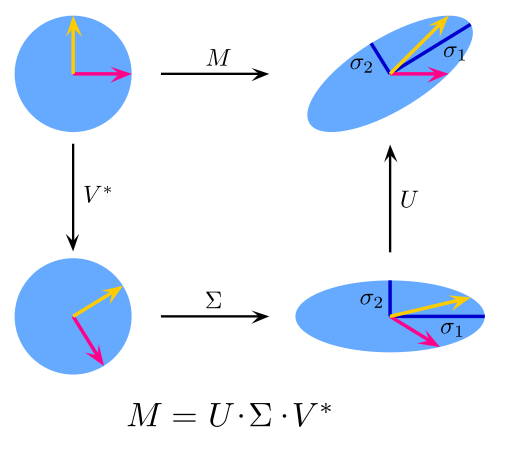

| Singular Value Decomposition. |

The cognitive platform

These cognitive services can be fueled by publicly available web and social data, your own private information, or data you acquire from data partners or others. The APIs on this platform can be grouped into four categories:

- Language: A set of APIs including, but not limited to, classifying natural language text, conversations, entity extraction, semantic concept extraction, document conversion, language translation, passage retrieval and ranking, relationship extraction, tone analysis, and so on.

- Speech: A set of APIs for converting speech to text and text to speech, including the ability to train with your own language models.

- Vision: APIs to find new insights, derive significant value, and take meaningful action from images.

- Data Insights: Pre-enriched content (for example, news and blogs) with natural language processing to allow for highly targeted search and trend analysis.

Each of these APIs performs a different task, and in combination they can be adapted to solve numerous business problems or create deeply engaging experiences. Combining these cognitive services with traditional data analytics capabilities facilitates complex discoveries, predictive insights, and engines that carry out the decisions driven by those insights.

The Machine Learning Framework

An average data scientist deals with loads of data daily. Some say 60-70% of that time is spent on data cleaning, munging, and bringing data into a format to which machine learning models can be applied. This post focuses on the second part, i.e., applying machine learning models, including the preprocessing steps. The pipelines discussed in this post are the result of over a hundred machine learning competitions that I’ve taken part in. Note that the discussion here is very general but still useful; far more complicated methods also exist and are practised by professionals.

http://blog.kaggle.com/2016/07/21/approaching-almost-any-machine-learning-problem-abhishek-thakur/
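The "preprocess, then apply a model" flow described above can be sketched in a few lines of dependency-free Python. This is not the pipeline from the linked post: the nearest-centroid model, the function names, and the toy data are all assumptions made for illustration. The one point it tries to show faithfully is that scaling statistics are learned from the training split only and then reused on the validation split.

```python
import random
from statistics import mean, pstdev


def split(rows, labels, valid_fraction=0.25, seed=0):
    """Shuffle reproducibly, then hold out a validation set."""
    order = list(range(len(rows)))
    random.Random(seed).shuffle(order)
    cut = int(len(order) * (1 - valid_fraction))
    train, valid = order[:cut], order[cut:]
    return ([rows[i] for i in train], [labels[i] for i in train],
            [rows[i] for i in valid], [labels[i] for i in valid])


def fit_scaler(rows):
    """Learn per-feature mean and standard deviation."""
    columns = list(zip(*rows))
    means = [mean(c) for c in columns]
    stds = [pstdev(c) or 1.0 for c in columns]  # guard against zero variance
    return means, stds


def scale(rows, scaler):
    """Standardize rows with previously learned statistics."""
    means, stds = scaler
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def fit_centroids(rows, labels):
    """Nearest-centroid model (an assumed stand-in): one mean vector per class."""
    grouped = {}
    for row, label in zip(rows, labels):
        grouped.setdefault(label, []).append(row)
    return {label: [mean(c) for c in zip(*members)]
            for label, members in grouped.items()}


def predict(rows, centroids):
    """Assign each row to the class with the closest centroid."""
    def distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [min(centroids, key=lambda label: distance(row, centroids[label]))
            for row in rows]


def run_pipeline(rows, labels):
    """Split, scale, fit, predict; return validation accuracy."""
    x_train, y_train, x_valid, y_valid = split(rows, labels)
    scaler = fit_scaler(x_train)  # statistics come from training data only
    centroids = fit_centroids(scale(x_train, scaler), y_train)
    predicted = predict(scale(x_valid, scaler), centroids)
    return sum(p == a for p, a in zip(predicted, y_valid)) / len(y_valid)


# Toy data (hypothetical): two well-separated classes with two features each.
ROWS = [[i, 100 + i] for i in range(10)] + [[50 + i, 500 + i] for i in range(10)]
LABELS = [0] * 10 + [1] * 10

if __name__ == "__main__":
    print("validation accuracy:", run_pipeline(ROWS, LABELS))
```

In practice each stage would be swapped for something stronger (a library scaler, a tuned model, cross-validation), but the ordering of the stages stays the same.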

Architecture: Real-Time Stream Processing for IoT

This article describes the infrastructure to handle streams of data fed from millions of intelligent devices in the Internet of Things (IoT). The architecture for this type of real-time stream processing must deal with data import, processing, storage, and analysis of hundreds of millions of events per hour. The architecture below depicts just such a system.

IoT Stream Processing Architecture

https://cloud.google.com/solutions/architecture/real-time-stream-processing-iot
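To make the "processing" stage of such an architecture concrete, here is a small in-memory sketch of tumbling-window aggregation over device events. It is an illustration only: the `Event` fields, the `window_averages` function, and the 60-second window are assumptions, and a production system would rely on managed ingestion and stream-processing services rather than a Python loop.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """Hypothetical shape of one device reading."""
    device_id: str
    timestamp: float  # seconds since some epoch
    value: float


def window_averages(events, window_seconds=60):
    """Group events into fixed (tumbling) windows per device and average them."""
    sums = defaultdict(lambda: [0.0, 0])  # (device, window_start) -> [total, count]
    for event in events:
        window_start = int(event.timestamp // window_seconds) * window_seconds
        bucket = sums[(event.device_id, window_start)]
        bucket[0] += event.value
        bucket[1] += 1
    return {key: total / count for key, (total, count) in sums.items()}


if __name__ == "__main__":
    stream = [
        Event("a", 0, 10.0),
        Event("a", 30, 20.0),
        Event("a", 61, 30.0),
        Event("b", 5, 1.0),
    ]
    for (device, start), average in sorted(window_averages(stream).items()):
        print(device, start, average)
```

Keying the aggregation on `(device_id, window_start)` means each device's windows can be computed independently, which is what allows this kind of processing to be spread across many workers.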

Data Science Automation For Big Data and IoT Environments

The purpose of data science is not only to do machine learning or statistical analysis, but also to derive insights out of the data that a user with no statistics knowledge can understand.

The half of data science that requires manual intervention is still to be automated. However, those are areas that involve the experience and wisdom of people: a data scientist, a business expert, a software developer, a data integrator, everyone who currently contributes to making a data-science project operational. This makes it difficult to automate every aspect of data science. Still, we can think of data science automation as a two-level architecture, wherein:

- Different data science disciplines/components are automated

- All the individual automated components are interconnected to form a coherent data-science system

Figure 1. The required elements of an automated data science system.
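The two levels can be sketched in Python as follows: each automated component is a plain function, and a small combinator connects them into one system. The component names (`clean`, `summarize`, `explain`), the `connect` helper, and the dictionary passed between stages are all hypothetical; the sketch only shows the shape of the idea, including a final step that phrases the result for a reader without statistics knowledge.

```python
from functools import reduce
from statistics import mean


def clean(state):
    """Automated component 1: drop missing readings."""
    return {**state, "data": [x for x in state["data"] if x is not None]}


def summarize(state):
    """Automated component 2: compute a simple statistic."""
    return {**state, "mean": mean(state["data"])}


def explain(state):
    """Automated component 3: phrase the result for a non-statistician."""
    return {**state, "insight": f"Typical value is about {state['mean']:.1f}."}


def connect(*components):
    """Second level: chain individual components into one coherent system."""
    def system(state):
        return reduce(lambda acc, step: step(acc), components, state)
    return system


if __name__ == "__main__":
    pipeline = connect(clean, summarize, explain)
    print(pipeline({"data": [2, None, 4, 6]})["insight"])
```

Because every component takes and returns the same kind of state, components can be automated and tested one at a time and then rearranged or replaced without changing the others.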
